I still got the title error - I don’t get it

I even changed the og:title tag to match, but still got the error

Any ideas?

How do you manually exclude specific URLs from the sitemap Scrutiny builds? For example, Scrutiny finds my custom 404 pages, but 404 pages should not be included in a sitemap. Why? Because Sitemaps aid search engine bots in indexing content you want displayed in a search. You don’t want your custom 404 page to appear in a Google search, hence it should be excluded.

Currently, I use Blocs-generated sitemaps. Since I build my English & Japanese pages in two separate documents, the main sitemap.xml used at the root of my domain excludes the /en (English) URLs. I then put a different sitemap.xml at the /en directory root to list out the English pages only, excluding the Japanese. Not sure if this is the best way to do it for SEO, but that’s the way I’ve been doing it for a long time. If anyone has a source to show why doing it that way is bad, I would like to hear from you.

Next…

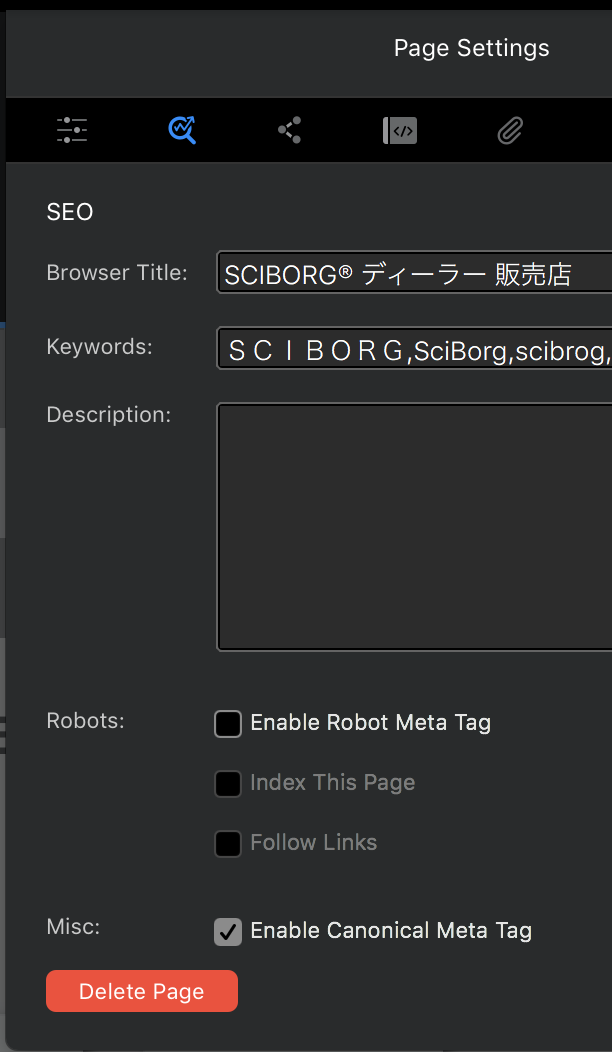

Canonical is an important topic of consideration seeing Blocs 4.0.0 has added that to Page Settings > SEO…

For a long time in Blocs, I have manually added <link rel="canonical" href="https://mysite.com"> in Page Settings > Code > Header. (Where “mysite” is just a dummy example URL.) Blocs 4.0.0 makes it more automatic if you tick that checkbox, but I currently only use a Canonical tag on the top/home page of each of my sites. Since I have Japanese (primary language) & English version pages, I use a “canonical” tag on “https://mysite.com” & “https://mysite.com/en”, for example. Since I do not use a CMS or otherwise use “parameters,” I do not use Canonical on other pages within my site. I then use .htaccess to keep the “www.” out of my URLs and to redirect http to https.

I found this page to be a good resource explaining Canonical. And this page says that Google OK’s Canonical for use on ALL pages on a site, but they also say that such is not necessary (on pages that don’t use parameters).

Lastly…

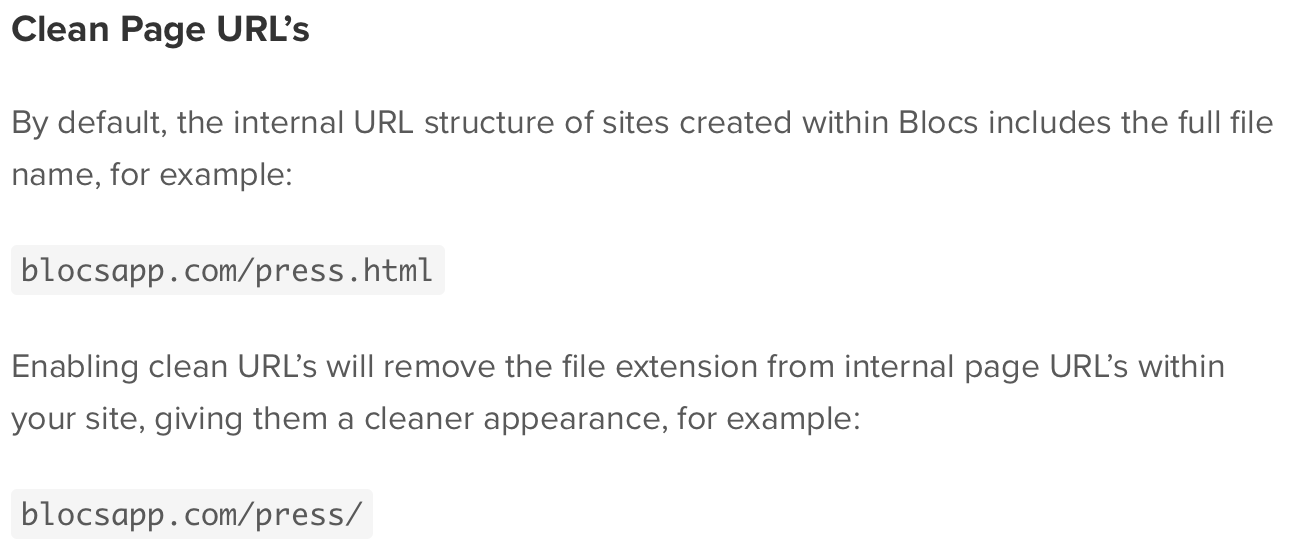

I’ve been too busy to use Clean URLs or even to study up on them in-depth. From what I understand, enabling Clean URLs would chop off .php or .html at the end of a URL in the browser using… what? JavaScript? Again, I don’t use a CMS or parameters, so I don’t see a need to enable that, personally. If someone can argue why it is necessary, I’m all ears.

I’m also a bit confused by what’s written in the Knowledgebase here:

/press.html is not the same as /press/ ! The latter is a directory. The former is a web page.

Thanks.

Technically yes, but it doesn’t work that way. Typically a URL that doesn’t specify a file will return an index file.

Hence why /press/ is the same as /press/index.html and is how the clean URLs in this case work.
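A minimal sketch of that lookup, in case it helps. The function name and the default `index.html` are assumptions for illustration only; real servers make the index file name configurable (Apache's `DirectoryIndex`, for example), and this is not how Blocs itself is implemented.

```python
def resolve_request_path(url_path: str, index_name: str = "index.html") -> str:
    """Return the file a typical static web server would serve for a URL path.

    Hypothetical helper: index_name is an assumed default, not a Blocs setting.
    """
    # A path ending in "/" names a directory, so the server falls back
    # to the index file inside that directory.
    if url_path.endswith("/"):
        return url_path + index_name
    # Anything else is treated as a direct request for that file.
    return url_path


print(resolve_request_path("/press/"))      # /press/index.html
print(resolve_request_path("/press.html"))  # /press.html
```

So with clean URLs the page is published as `/press/index.html`, and the visitor only ever sees `/press/`.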

Hi JDW, I’m the developer of Scrutiny, I’m always keen to answer questions or look at issues. I’d welcome anything like this via the support form.

You shouldn’t need to manually exclude a 404 page. Scrutiny certainly should exclude URLs with 4xx and 5xx status codes from the sitemap, for the reason you’ve given. (Any links to the page should be in the Link results, but the page shouldn’t appear in the Sitemap table.) Is your page a ‘soft 404’, i.e. one which returns a 200 code but says ‘page not found’? If so then you can use Scrutiny’s soft 404 feature so that a link to it is marked as a bad link and it’s excluded from the sitemap. There are two other workarounds. One is to give your custom 404 page a robots noindex meta tag (which might be a good thing to do anyway). The other is to give it a canonical pointing to a different URL than its own. Both of these things exclude a page from the sitemap.
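The exclusion rules described above could be sketched roughly like this. It is only an illustration of the logic, not Scrutiny's actual code; the `Page` class, its field names and `belongs_in_sitemap` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Page:
    """Hypothetical record of what a crawler learned about one page."""
    url: str
    status: int                      # HTTP status code returned for the page
    noindex: bool = False            # page carries a robots noindex meta tag
    canonical: Optional[str] = None  # href of rel="canonical", if present
    soft_404: bool = False           # returns 200 but is a 'page not found' page


def belongs_in_sitemap(page: Page) -> bool:
    """Apply the exclusion rules from the post above, one at a time."""
    if 400 <= page.status < 600:
        return False  # real 4xx / 5xx responses are left out
    if page.soft_404:
        return False  # soft 404s are treated like bad links
    if page.noindex:
        return False  # robots noindex keeps the page out
    if page.canonical is not None and page.canonical != page.url:
        return False  # canonical points to a different URL than its own
    return True


print(belongs_in_sitemap(Page("https://mysite.com/", 200)))                   # True
print(belongs_in_sitemap(Page("https://mysite.com/404.html", 404)))           # False
print(belongs_in_sitemap(Page("https://mysite.com/404.html", 200, noindex=True)))  # False
```

Here `mysite.com` is the same dummy domain used earlier in the thread.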

To answer the question directly, there’s currently not a way to manually exclude a url or urls (other than by excluding the page or pages from the scan). I’ve never considered this; it hasn’t been requested and I’ve not had a need to do it. I’ll treat that as an enhancement request; there’s already a ‘sitemap rules’ table, the option might neatly fit in there.

If your page is really giving a 404 response code and it’s appearing in the sitemap then it shouldn’t be and I’d consider that a bug. I’ll test this today. If you can give me a specific example, that would be helpful.

I hope that helps.

Perfect, thank you for the excellent tip!

Hi @JDW, funny: at the same time you asked in your mail for the possibility to exclude pages from the sitemap, I had just sent the same request to Norm. I use Volt CMS a lot and want the login page excluded from the sitemap.xml as well. We’ll wait for @Norm to answer.

My only question remaining then is this (not related to Scrutiny)…

Currently, I use Blocs-generated sitemaps. Since I build my English & Japanese pages in two separate documents, the main sitemap.xml used at the root of my domain excludes the /en (English) URLs. I then put a different sitemap.xml at the /en directory root to list out the English pages only, excluding the Japanese. Not sure if this is the best way to do it for SEO, but that’s the way I’ve been doing it for a long time. If anyone has a source to show why doing it that way is bad, I would like to hear from you.

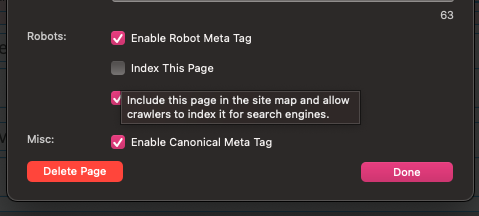

To exclude a page from the sitemap, un-tick the “Index this page” option in Page Settings under SEO.

I should mention for everyone who doesn’t already know that I’ve been playing with the Scrutiny Trial, which lasts 30 days (no exporting). It’s currently on sale for $86.25, but I guess that sale will end in a matter of hours? Here’s the product web page:

That’s the beauty of this fantastic forum.

First of all I’ll assume we are now talking exclusively about Blocs 4. So much has changed since the last post around 18 months ago that it might have been better to create a new topic.

Having only ever built sites in English I sometimes struggle with your queries that involve multilingual issues, because it’s beyond my direct experience. The developer of Scrutiny is actually a forum member and I see she has already replied. In the past @peahen has made changes to Scrutiny specifically to help Blocs users, so she is a valuable asset to have on the forum.

I believe the sitemaps in Blocs 4 have become more detailed than before if you want to try that, however I also use Scrutiny for troubleshooting purposes and think of it like a bloodhound for uncovering issues that would otherwise have been missed. Let’s face it, on a big site errors can happen so easily, though Blocs is making that rarer.

Have you selected the option in Scrutiny to limit the crawl based on robots.txt? It’s the first option in the scope section of the settings. You could either set that in your robots.txt file on the server or in the page settings of Blocs. FWIW I just checked a sitemap produced in Scrutiny on a recent Blocs 4 site and it did not list the 404 page.

Regarding canonical links I have also added the header manually in the past, however it was prone to human error, so I was happy to see this tick box option included with Blocs 4. I apply this to every page and I am not quite sure why you could not do so with yours.

Be aware that Scrutiny will block a page from appearing in the sitemap if the canonical link is incorrect. However, this would be highlighted in the report before export and shouldn’t be an issue now that Blocs can do this automatically. Selecting the canonical option is a real bonus for SEO.

I think @PeteSharp has addressed the clean URL point, and I always enable these. There is no SEO penalty and they just look more professional. There are other advantages but we’d be going way off topic.

Regarding the dual sitemaps that might be one that @peahen is better qualified to answer but I would have thought you could combine the two by copy/paste and just place them at the root level. Logically if you produce two separate sites Blocs will create separate sitemaps if instructed.

@sandy For Volt, if you deselect all the robot index options on the login page and avoid linking to it from elsewhere on the site, it shouldn’t be indexed. Either set it as shown below or leave all options deselected. I’ve just checked two sites and it works.

Because until now I added them manually and I wished to avoid mistakes. Another reason is that Google doesn’t require them or say “they must be included.” Google simply says, “it’s okay if you use them on all your web pages, especially if you use parameters.” I don’t use parameters or CMS.

What I would appreciate knowing is this, @peahen. I built my English & Japanese pages in two separate documents, the main sitemap.xml used at the root of my domain excludes the /en (English) URLs. I then put a different sitemap.xml at the /en directory root to list out the English pages only, excluding the Japanese. Not sure if this is the best way to do it for SEO, but that’s the way I’ve been doing it for a long time.

Would you just use 1 sitemap.xml at the root level of your domain and expect that to cover multiple languages that are separated by directory? Or is my method of having a Japanese web page sitemap at the root and a separate sitemap covering only the English pages in /en a reasonable way to go about sitemaps?

Thanks @Flashman, this is exactly how I just did it after reading this about the SEO option. Thanks as well!

Having not made a multi language site, I am curious about this. So you submit both site maps to Google I assume?

Yes.

Not sure why those dates are so old. I did originally submit the English one back in 2019, I guess, but can I assume that Google keeps checking those URLs for updates to the Sitemap so I don’t need to resubmit all the time?

This is the part that is beyond my direct experience given your site structure. Assuming you can reach any part of the two combined sites through links I would think it OK to combine both site maps as a single document at the root level, but first perhaps, just check to see if your pages are currently being indexed and whether this actually needs to be changed. There is no point in looking to resolve a problem if it doesn’t exist. This might be something that Scrutiny could handle as a single task.
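If you did want to try combining them without copy/paste, a rough sketch using only Python's standard library could look like the following. The `merge_sitemaps` function is hypothetical, the URLs are the dummy `mysite.com` ones from earlier in the thread, and it assumes both files are plain `urlset` sitemaps in the standard sitemaps.org namespace.

```python
import xml.etree.ElementTree as ET

# Standard namespace used by sitemap.xml files.
NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def merge_sitemaps(*sitemap_xml: str) -> str:
    """Combine several sitemap documents into one, skipping duplicate URLs."""
    ET.register_namespace("", NS)  # write the result without an ns0: prefix
    merged = ET.Element(f"{{{NS}}}urlset")
    seen = set()
    for xml_text in sitemap_xml:
        root = ET.fromstring(xml_text)
        for url in root.findall(f"{{{NS}}}url"):
            loc = url.findtext(f"{{{NS}}}loc", default="").strip()
            if loc and loc not in seen:
                seen.add(loc)
                merged.append(url)  # keeps lastmod etc. if present
    return ET.tostring(merged, encoding="unicode")


# Dummy Japanese (root) and English (/en) sitemaps for illustration.
ja = f'<urlset xmlns="{NS}"><url><loc>https://mysite.com/</loc></url></urlset>'
en = f'<urlset xmlns="{NS}"><url><loc>https://mysite.com/en/</loc></url></urlset>'
print(merge_sitemaps(ja, en))
```

The combined file would then sit at the root of the domain. Whether that is actually better for SEO than two separate sitemaps is the open question here; the sketch only shows that merging is mechanically simple.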

Right. They are indexed now, so there is technically no problem. But there could be a problem and Google is just working around it. That is really why I asked.

Just make sure you don’t have a link to the login page elsewhere on your site and you should be good. I also make a point of calling the login page something random like a place name, rather than calling it admin or login. Volt is about as secure as it gets for a CMS if you follow a couple of sensible measures.