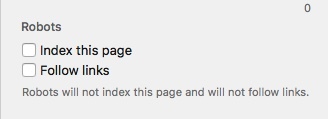

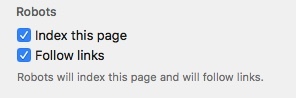

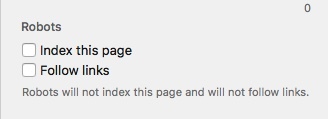

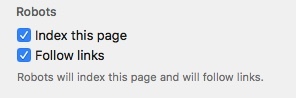

Perhaps I have missed something, but I'd like an option within Blocs to control settings for search robots, ideally on a page-by-page basis. Something like what you see in the attachments would be simple to manage within the page settings menu.

As you can see from the screenshots, each variation clearly explains how the robots should treat each page. This would remove the need to create separate robot files and edit them on the server.

This seems like more work than a robots.txt file in the site’s root.

http://www.robotstxt.org/robotstxt.html
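For comparison, here is a minimal Python sketch of what that root-level file boils down to: one `User-agent` line followed by one `Disallow` line per path you want crawlers to skip. The function name and the example paths are hypothetical; the directive format follows the robotstxt.org page linked above, where an empty `Disallow:` means nothing is blocked.

```python
def build_robots_txt(disallowed_paths, user_agent="*"):
    """Return robots.txt text asking crawlers matching user_agent to skip each path."""
    lines = [f"User-agent: {user_agent}"]
    if not disallowed_paths:
        # An empty Disallow value tells crawlers nothing is off limits.
        lines.append("Disallow:")
    for path in disallowed_paths:
        # One line per page or directory to exclude (paths are site-relative).
        lines.append(f"Disallow: {path}")
    return "\n".join(lines) + "\n"


# Hypothetical paths: a drafts directory and a legacy redirect page.
print(build_robots_txt(["/drafts/", "/old-page.html"]))
```

Each excluded page costs one short line of text, which is the "half line per page" trade-off discussed in this thread.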

With a robots.txt file in the root on the server, you have to remember to change it as the site changes, and out of sight is often out of mind. Having it in front of you as you edit pages means you are unlikely to forget. Newer users may not even know they should have a robots file.

We’ll have to disagree I guess. The thought of 20+ mouse clicks to update 4 or 5 pages versus typing 4 or 5 half lines of text seems nightmarish to me. Not to mention being able to see at a glance what pages you have disallowed rather than opening up every page’s settings dialog to verify.

But if you really want to do this on a page-by-page basis, you could just add the robots meta tag in the head section of the page settings.
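As a rough illustration of the per-page approach, this Python sketch produces the robots meta tag you would paste into a page's head section. It assumes index and follow as the defaults (crawlers treat a page without the tag the same way); the function name and the per-page settings dictionary are hypothetical and are not part of Blocs.

```python
def robots_meta_tag(index=True, follow=True):
    """Return a robots meta tag for one page, defaulting to index, follow."""
    directives = ["index" if index else "noindex", "follow" if follow else "nofollow"]
    content = ", ".join(directives)
    return f'<meta name="robots" content="{content}">'


# Hypothetical per-page settings: (index, follow) for each page.
pages = {
    "index.html": (True, True),
    "old-url.html": (False, True),
    "private.html": (False, False),
}
for page, (index, follow) in pages.items():
    print(page, robots_meta_tag(index, follow))
```

Only the pages you want excluded actually need the tag; leaving it out is equivalent to the index, follow default.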

This was an issue I had with Freeway, where it would take 97 mouse clicks to edit something that I could easily change in a couple of text edits.

I would love to see a components feature in Blocs much like GoLive had back in the day… where you could define the contents of a component and apply it to multiple pages. Then just edit a component to apply the changes across every page it was assigned to.

@ScottinPollock wow, GoLive! Now there’s a blast from the past…the good ole days. I was so pissed when Adobe pulled the plug on it.

I normally create meta tags for the head section in each page. Once one is complete, it's a simple copy and paste.

Just used to doing it that way.

Casey

Why make it so complicated? Most people want all their pages indexed and followed, but with the flexibility to disable these options for specific pages.

Simply set pages to index and follow by default, so in 90% of cases you’ll never need to touch a thing. Then when a time comes that you don’t want a page indexed/followed you deselect the option. Takes about 1 second for a page.

Well… maybe I’m different. I usually have a number of pages on site that I don’t want indexed, sometimes entire directories. They could be just redirects for legacy urls, or pages I just don’t want users to access directly from a search engine. Pretty common stuff IMHO.

My point is, if this can be easily managed externally, and from tags in the page settings interface, why do we need yet another interface element for it?

Anyway, I won’t argue this any further… I just think that if interface elements are to be added, they should be added for things where multiple methods don’t already exist.

I agree it's not worth arguing about; it's just down to personal opinion. I have a domain reserved purely for testing with RapidWeaver, where nothing is indexed, and I simply apply the meta tag on a site-wide basis. My guess is that most Blocs users might be a bit reluctant to mess around with text files on the server, and the same goes for .htaccess.

Like yourself I am a former Freeway user (who isn’t these days) and I’m surprised it lasted as long as it did. The underlying code was ancient and I always wanted Softpress to start from scratch with something completely new like Blocs, but it never happened.

Yeah… that was never gonna happen, but not entirely their fault. Apple promised a lot with Carbon, and many devs took the bait only to find out years down the road it would be a dead end.

I think Blocs shows they could have started something fresh, but for whatever reason they were never prepared to take that step. I started with FW4 and ended with FW6 after it became apparent they were never going to change.

Well, they couldn't exactly start fresh with so many users and even more site files and plugins. Those would need to be supported going forward, and that could be a lot harder than just starting from scratch.

But as you say… after seeing how little had changed in versions 6 and 7, it seemed pretty clear they did not have the bandwidth for a major rewrite.